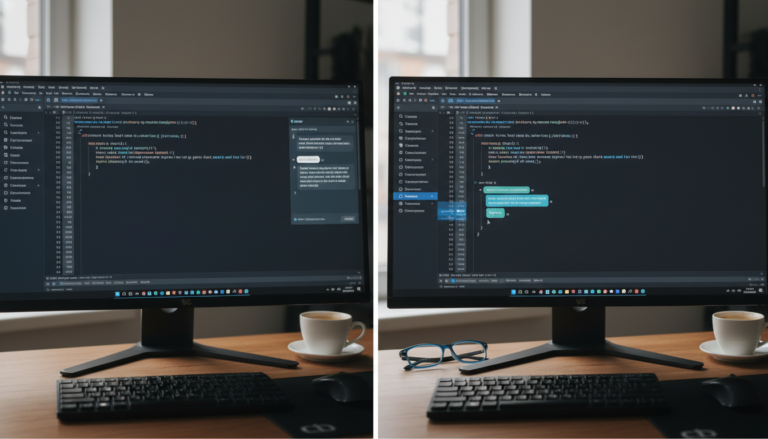

Remember the first time you used an AI coding assistant? It felt like magic, until it suggested a library that didn’t exist or tried to solve a simple loop with a 500-line recursion. We’ve all been there. You’re trying to innovate, but the tool is busy hallucinating a new version of React. The truth is, these models are not mind readers. They are highly advanced pattern matchers. To get the best out of them, you need to stop treating them like a search engine and start treating them like a very fast, very literal junior developer.

The Mental Shift: From Search Engine to Junior Partner

When we search Google, we use keywords. When we prompt an AI, we provide context. This transition is where most developers stumble. If you ask an AI to write a function to sort a list, you’ll get a generic response. If you tell it the specific constraints of your data, the memory limits of your environment, and the preferred naming conventions of your team, you get code that is far closer to production-ready.

Why Context is King

The context window is your most valuable asset. Think of it as the short-term memory of your pair programmer. If you do not fill it with relevant information, the AI will fill the gaps with guesses, and many of those guesses will be wrong. By providing clear definitions and existing code patterns, you narrow the range of plausible outputs toward the one you actually want.
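Here is a minimal sketch of what filling the context can look like in practice. The `PromptContext` class, its field names, and the sample values are all invented for illustration; nothing here calls a real AI service, it only assembles the text you would send.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Facts the model cannot guess. All field names are illustrative."""
    task: str
    constraints: list[str] = field(default_factory=list)
    conventions: list[str] = field(default_factory=list)
    existing_code: str = ""


def build_prompt(ctx: PromptContext) -> str:
    """Assemble one prompt: the task first, then each non-empty context section."""
    sections = [f"Task: {ctx.task}"]
    if ctx.constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in ctx.constraints))
    if ctx.conventions:
        sections.append("Conventions:\n" + "\n".join(f"- {c}" for c in ctx.conventions))
    if ctx.existing_code:
        sections.append("Existing code to match:\n" + ctx.existing_code.strip())
    return "\n\n".join(sections)


# Hypothetical values, standing in for the details of your own project.
prompt = build_prompt(
    PromptContext(
        task="Write a function to sort a list of order records by date.",
        constraints=["Up to 10 million records.", "Must run within 512 MB of memory."],
        conventions=["snake_case names.", "Type hints on every function."],
        existing_code="def load_orders(path: str) -> list[dict]: ...",
    )
)
print(prompt)
```

The point of the structure is that an empty section is simply left out, so the prompt never contains a heading with nothing under it for the model to guess about.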

The Secret Sauce: Role-Play and Personas

It sounds silly, but it often works. Telling an AI to act as a Senior Systems Architect or as a Python performance expert does not change the model’s weights, but it does change the context the model conditions on, which shifts which tokens it is likely to generate. In practice, that shifts the model’s tone and the complexity of the solutions it provides. If you want robust, secure code, explicitly ask it to prioritize security and edge-case handling before it writes a single line.

Example: The Architect Prompt

Instead of saying “build an API,” try saying: “Act as a Lead Backend Developer. Design a RESTful API using FastAPI that follows SOLID principles. Ensure that you include type hinting and Pydantic validation for all requests.” Notice the difference? You are setting the guardrails. For more on these techniques, check out the official OpenAI Prompt Engineering guide.
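If you send prompts from code, the persona usually goes in a system message and the task in a user message. The sketch below assumes your provider accepts the common `role`/`content` chat-message layout; the `persona_messages` helper is hypothetical, and no request is actually sent.

```python
def persona_messages(
    persona: str, requirements: list[str], task: str
) -> list[dict[str, str]]:
    """Build a system message that sets the persona and guardrails, plus the user task."""
    system = f"Act as a {persona}."
    if requirements:
        system += " Requirements:\n" + "\n".join(f"- {r}" for r in requirements)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": task},
    ]


messages = persona_messages(
    persona="Lead Backend Developer",
    requirements=[
        "Follow SOLID principles.",
        "Include type hints.",
        "Use Pydantic validation for all requests.",
    ],
    task="Design a RESTful API using FastAPI.",
)

for message in messages:
    print(f"[{message['role']}]\n{message['content']}\n")
```

Keeping the guardrails in the system message means you can reuse them across many tasks without retyping them.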

Breaking Down the Monolith: Modular Prompting

One of the biggest mistakes coders make is asking the AI to build a whole feature at once. This tends to produce timeouts, truncated code, and logic errors. Instead, use a modular approach. Break the problem into small, digestible chunks. This isn’t just good for the AI; it is good engineering practice. Build the data model first. Then the helper functions. Then the logic. Then the tests.
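The four-step order above can be sketched as a small loop that sends one focused prompt per step and carries earlier results forward. This is only a sketch: `ask_model` stands for whatever function calls your assistant, and `fake_model` is a stand-in so the example runs without any service.

```python
from typing import Callable

STEPS = [
    "Define the data model.",
    "Write the helper functions.",
    "Write the core logic.",
    "Write the tests.",
]


def run_steps(
    feature: str, steps: list[str], ask_model: Callable[[str], str]
) -> list[str]:
    """Send one prompt per step, including all earlier outputs as context."""
    outputs: list[str] = []
    for number, step in enumerate(steps, start=1):
        prior = "\n\n".join(outputs) or "(nothing yet)"
        prompt = (
            f"Feature: {feature}\n"
            f"Step {number} of {len(steps)}: {step}\n"
            f"Work completed so far:\n{prior}"
        )
        outputs.append(ask_model(prompt))
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: echoes the step line of the prompt."""
    return "[draft for: " + prompt.splitlines()[1] + "]"


results = run_steps("user signup", STEPS, fake_model)
for result in results:
    print(result)
```

Because each prompt is small, you can review and correct the data model before the helpers are ever written against it.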

The Chain of Thought Technique

Ask the AI to think step-by-step. This forces the model to lay out the logic before it commits to the syntax. When the model explains its reasoning first, it tends to make fewer logical errors. You can even ask it to critique its own plan before writing the code.
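One way to apply this is to split the request into three calls: plan, critique, then code. The sketch below assumes a generic `ask_model` callable; `recording_model` is a fake that records prompts so the flow can be inspected without a real model.

```python
from typing import Callable


def plan_then_code(task: str, ask_model: Callable[[str], str]) -> dict[str, str]:
    """Ask for a plan, then a critique of that plan, then code that uses both."""
    plan = ask_model(
        "Think step by step. Write a numbered plan for this task, "
        f"with no code yet:\n{task}"
    )
    critique = ask_model(
        f"Critique this plan. List missed edge cases or logical gaps:\n{plan}"
    )
    code = ask_model(
        "Write the code for the task below. Follow the plan and address the critique.\n"
        f"Task: {task}\nPlan:\n{plan}\nCritique:\n{critique}"
    )
    return {"plan": plan, "critique": critique, "code": code}


calls: list[str] = []


def recording_model(prompt: str) -> str:
    """Fake model: stores each prompt and returns a numbered placeholder reply."""
    calls.append(prompt)
    return f"<reply {len(calls)}>"


result = plan_then_code("Deduplicate a list of user records by email.", recording_model)
print(result)
```

The final prompt contains both the plan and the critique, so the code request is grounded in reasoning you have already had a chance to read.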

Debugging: The Art of the Stack Trace

Don’t just say “fix this.” When an error pops up, paste the entire stack trace along with the relevant block of code. Better yet, explain what you’ve already tried. AI is incredibly good at spotting the semicolon you missed or the logical flaw in your filter function, but it needs to see the crime scene to solve the murder. Tools like GitHub Copilot thrive on this kind of specific interaction.
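As a rough illustration, the snippet below captures a full stack trace with Python’s standard `traceback` module and bundles it with the code and a list of attempts. The `build_debug_prompt` helper and the failing `average` snippet are made up for the example.

```python
import traceback


def build_debug_prompt(code: str, stack_trace: str, attempts: list[str]) -> str:
    """Bundle the code, the full trace, and what was already tried into one prompt."""
    tried = "\n".join(f"- {a}" for a in attempts) or "- (nothing yet)"
    return (
        "This code raises an error. Find the cause and suggest a fix.\n\n"
        f"Code:\n{code.strip()}\n\n"
        f"Full stack trace:\n{stack_trace.strip()}\n\n"
        f"Already tried:\n{tried}"
    )


# A deliberately broken snippet: dividing by the length of an empty list.
SNIPPET = '''
def average(values):
    return sum(values) / len(values)

average([])
'''

trace = ""
try:
    exec(SNIPPET, {})
except Exception:
    # format_exc() returns the whole traceback as text, not just the last line.
    trace = traceback.format_exc()

debug_prompt = build_debug_prompt(
    SNIPPET, trace, ["Checked that the input is a list."]
)
print(debug_prompt)
```

Sending the whole trace instead of only the final error line gives the model the call path, which is often where the real cause sits.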

Security and Ethics: Don’t Leak the Sauce

While we love our AI buddies, remember that they are external services. Never, ever paste API keys, private credentials, or proprietary business secrets into a prompt unless you are using an enterprise-grade, locally hosted instance. Always sanitize your code before you ask for a refactor. A good developer knows that safety is just as important as speed.
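A first-pass sanitizer can be as simple as a regular expression that blanks out quoted values assigned to suspicious-looking names. The pattern below is an illustrative assumption, not a complete list of secret formats, so treat it as a safety net and not a replacement for a dedicated secret scanner or a manual review.

```python
import re

# Matches assignments such as API_KEY = "..." or db_password: '...'.
# Illustrative only: real secrets come in many shapes this will not catch.
ASSIGNMENT = re.compile(
    r"""(\b\w*(?:api_?key|secret|token|password)\w*\s*[:=]\s*)(["'])(.*?)\2""",
    re.IGNORECASE,
)


def redact(text: str) -> str:
    """Replace the quoted value of each suspicious assignment with a placeholder."""
    return ASSIGNMENT.sub(
        lambda m: f"{m.group(1)}{m.group(2)}<REDACTED>{m.group(2)}", text
    )


# Fake credentials, invented for this example.
source = (
    'API_KEY = "sk-test-1234"\n'
    "timeout = 30\n"
    "db_password = 'hunter2'\n"
)

cleaned = redact(source)
print(cleaned)
```

Variable names are kept and only the values are replaced, so the model can still see how the configuration is used when you ask for a refactor.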

The Future of Human-AI Collaboration

We are moving into an era where the skill of a developer isn’t just defined by how well they can memorize syntax, but by how well they can orchestrate tools. Prompt engineering is the new literacy for the digital age. By mastering these techniques, you aren’t just coding faster; you are thinking more clearly about the systems you build. The machines are fast, but you are the one with the vision.

Start small, iterate often, and don’t be afraid to tell your AI it is being a bit dense. It won’t take it personally, and your codebase will thank you.